Ruqi Huang

Contact:

ruqihuang AT sz.tsinghua.edu.cn

Introduction

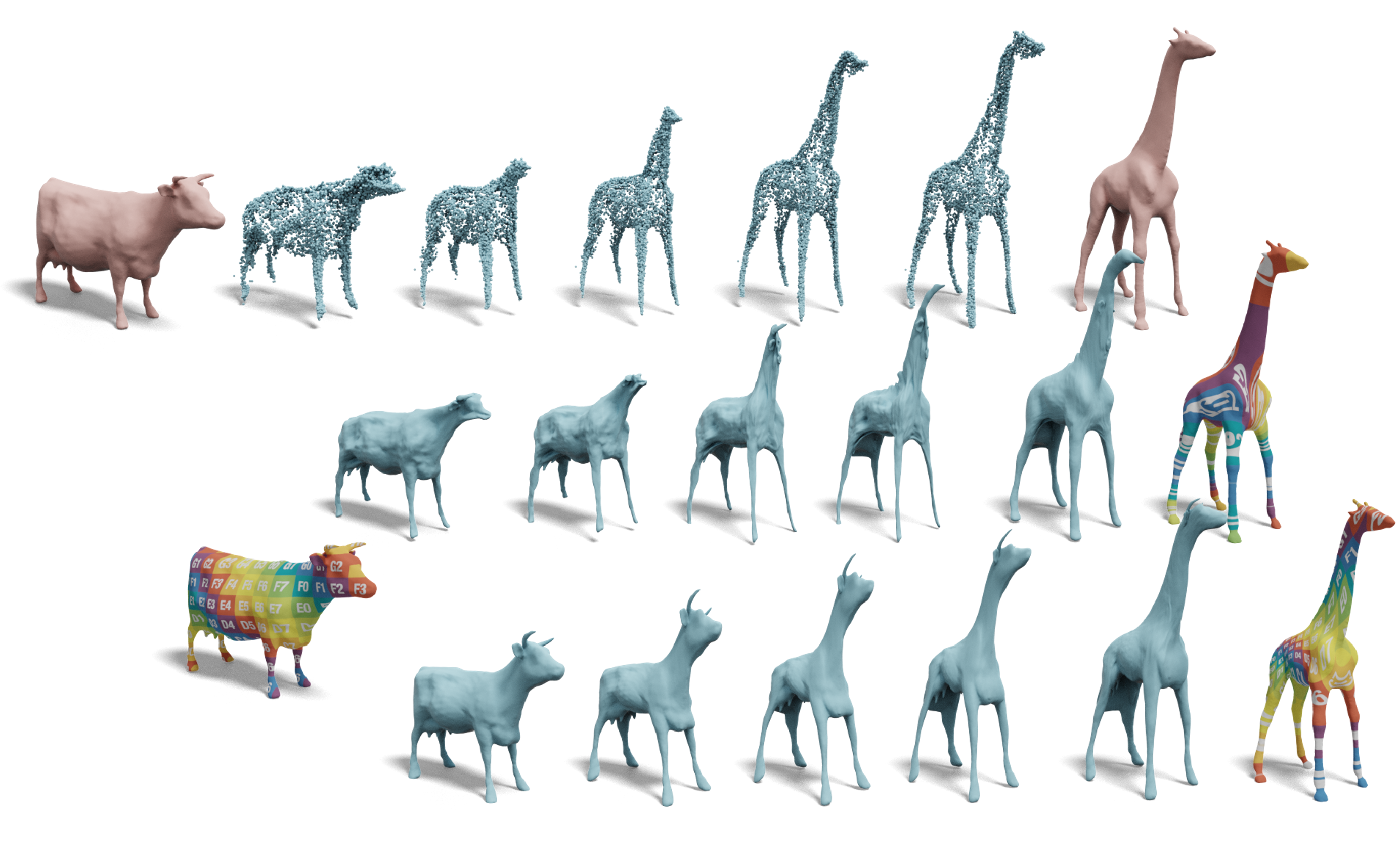

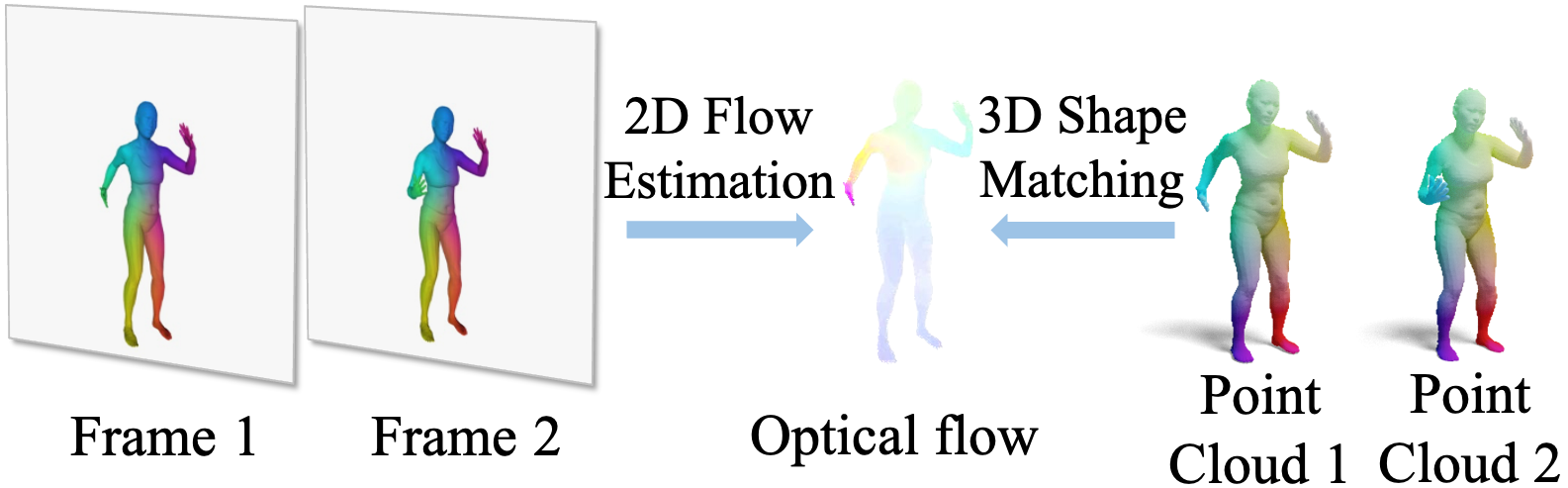

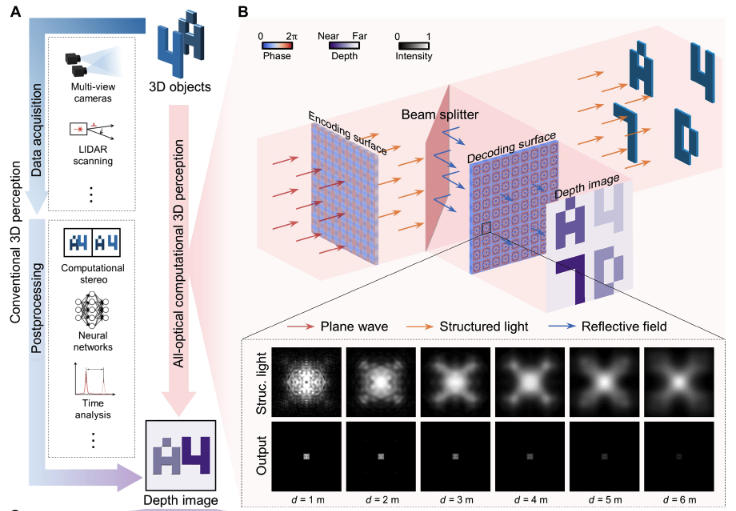

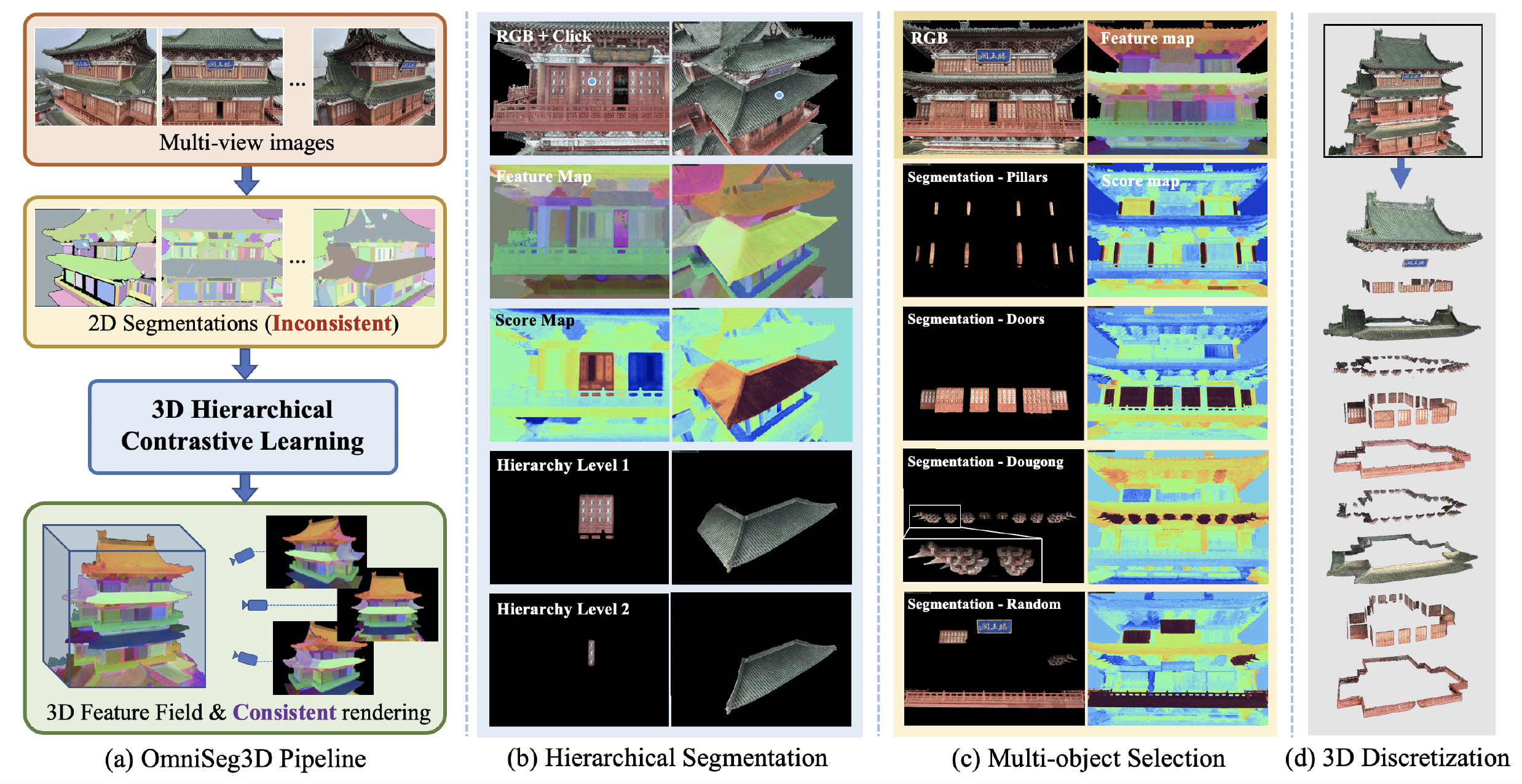

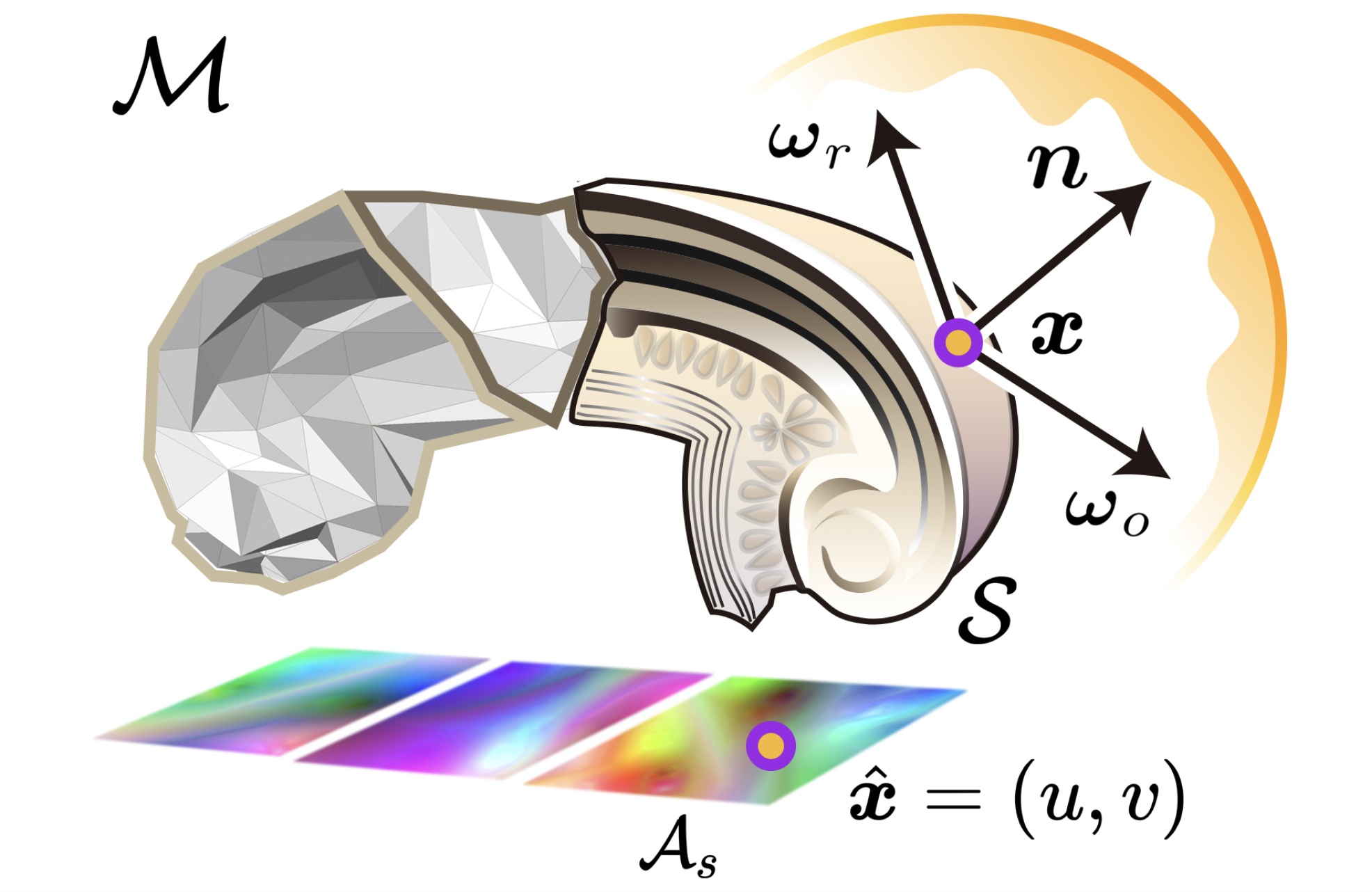

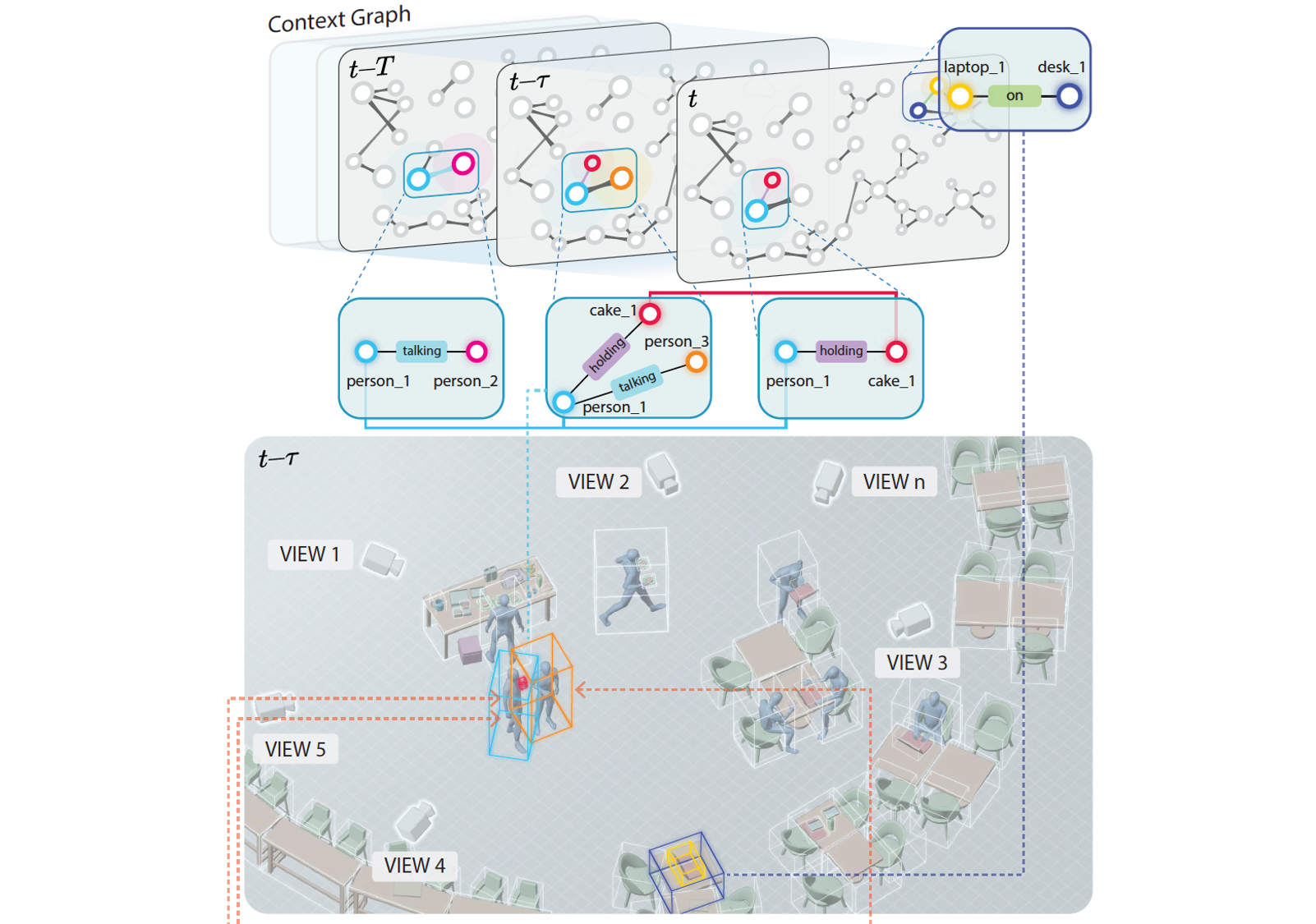

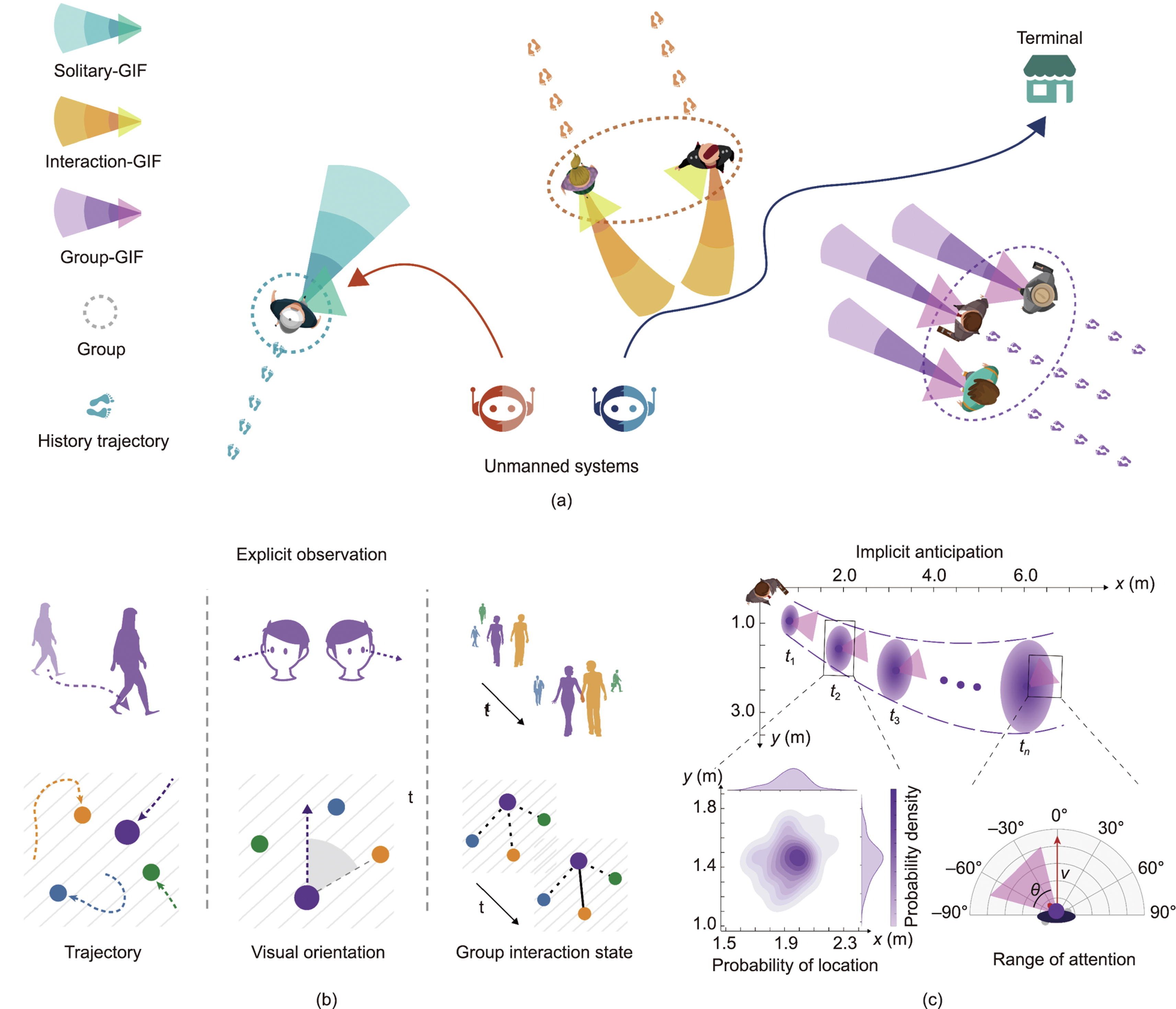

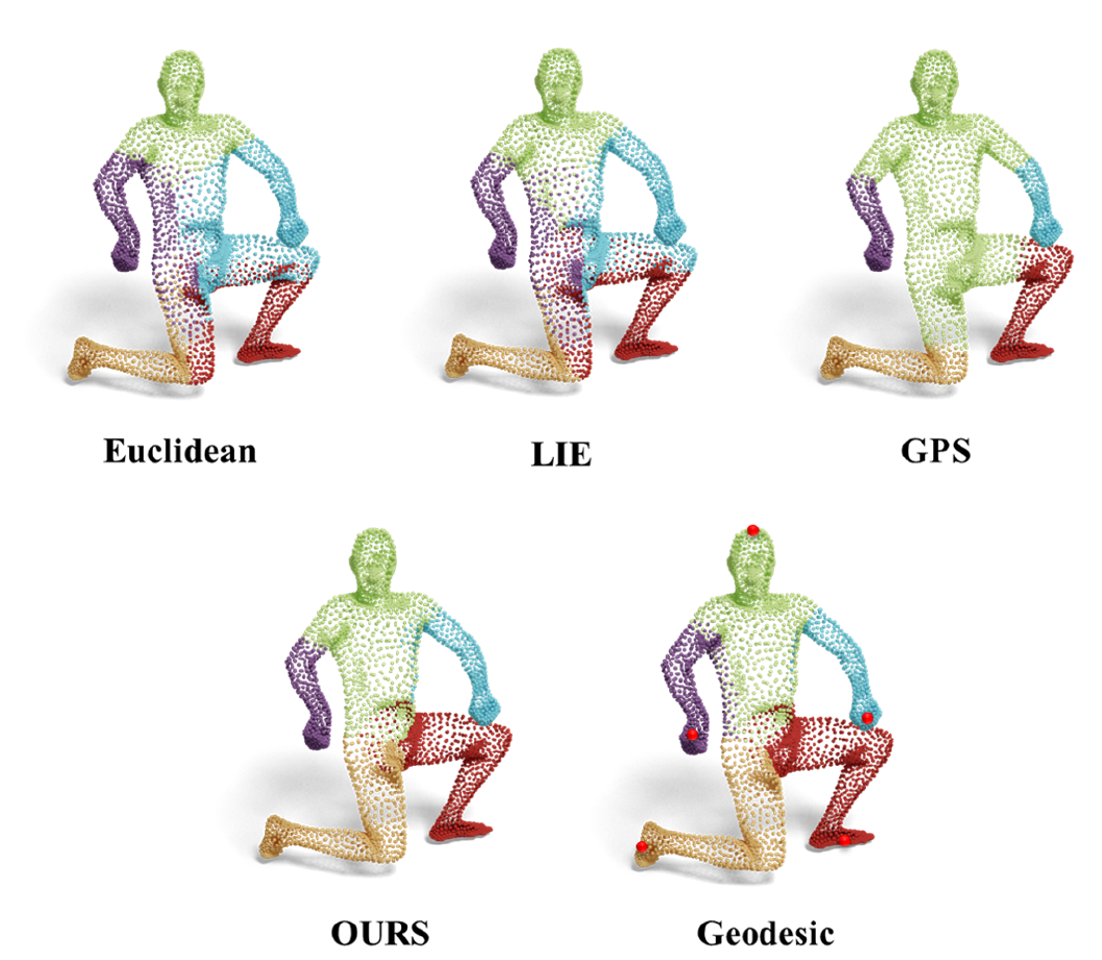

I obtained my PhD under the supervision of Frederic Chazal from University of Paris-Saclay in 2016. Prior to that, I obtained my bachelor's and master's degrees from Tsinghua University in 2011 and 2013, respectively. My research interests lie in 3D Computer Vision and Geometry Processing, with a strong focus on developing 3D reconstruction techniques for both static and dynamic scenes. In particular, I am interested in developing learning approaches to 3D computer vision tasks that do not depend heavily on supervision, by incorporating structural priors (especially geometric ones) into neural networks. Beyond that, I am also interested in applying geometric and topological analysis to interdisciplinary data, e.g., biological, medical, and high-dimensional imaging data. My full CV can be found here.